Understand the Confusion Matrix

Blow away the confusion

You’ve created a classification model and come across a new concept called the confusion matrix. However tough it may seem, no evaluation of a classification model is complete without a confusion matrix.


What is a confusion matrix?

For a binary classification problem, a confusion matrix is commonly a 2x2 matrix of counts that summarize how the instances were classified. For an n-class classification problem, it becomes an n x n matrix. What are these numbers and how do they come about? Let us take the example of a binary classification problem.

Let us take a contrived example: a parts bin that contains both defective and good parts. We have a classifier that detects defective parts based on certain parameters (features) in a dataset, and we need to evaluate how good the classifier is. Note that these parts are labelled good or defective, so that we can compare the classifier's predictions against the true labels.

We will assume a total of 100 parts, of which 60 are good and 40 are defective. Since our goal is to identify defective parts, we label them as 1 (the positive class) and the good parts as 0.

Building the confusion matrix

Let us build the confusion matrix in two steps.

Starting point

Before we bring in the classifier, this is how things look:

| True label | Number of parts |
|---|---|
| 1 (defective) | 40 |
| 0 (good) | 60 |

Bringing in the classifier

As we bring in the classifier, it classifies our parts as good or defective. Ideally, we would expect our classifier to identify all the defective parts, and only the defective parts. However, no classifier is perfect, and that is the whole point of the evaluation: our classifier makes mistakes too.

After this exercise, we have two versions of good and defective: the true label, and the label predicted by the classifier. To represent this, we can split the table above vertically, giving us four cells, one for each combination of true and predicted label.

Let’s assume our classifier has predicted the labels. We can then represent this prediction along with the true labels as follows:

| True label \ Predicted label | 1 | 0 |
|---|---|---|
| 1 | 30 | 10 |
| 0 | 20 | 40 |

This is nothing but the confusion matrix.
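The matrix above can be reproduced in a few lines of plain Python. This is a minimal sketch: the per-part labels `y_true` and `y_pred` are hypothetical, constructed only so that the counts match our example, and the helper `confusion_matrix_2x2` is ours, for illustration. The layout follows this post: rows are the true label, columns are the predicted label, with class 1 first.

```python
from collections import Counter

# Hypothetical labels for the 100 parts (1 = defective, 0 = good),
# built only so that the counts match the example in this post.
y_true = [1] * 40 + [0] * 60
y_pred = [1] * 30 + [0] * 10 + [1] * 20 + [0] * 40

def confusion_matrix_2x2(y_true, y_pred, labels=(1, 0)):
    """Return a 2x2 matrix: rows = true label, columns = predicted label."""
    counts = Counter(zip(y_true, y_pred))  # tally each (true, predicted) pair
    return [[counts[(t, p)] for p in labels] for t in labels]

cm = confusion_matrix_2x2(y_true, y_pred)
print(cm)  # [[30, 10], [20, 40]]
```

If scikit-learn is available, `sklearn.metrics.confusion_matrix(y_true, y_pred, labels=[1, 0])` should produce the same layout. Without the `labels` argument it sorts the labels, which puts class 0 in the first row and column.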

What do these numbers mean?

To understand what these numbers mean, let us superimpose the following legend on our confusion matrix above.

| True label \ Predicted label | 1 | 0 |
|---|---|---|
| 1 | TP | FN |
| 0 | FP | TN |

In the confusion matrix above, each cell is indexed by a (true label, predicted label) pair. Crossing the true label (1, 0) with the predicted label (1, 0) gives rise to four values: True Positives (TP) for (1,1), True Negatives (TN) for (0,0), False Positives (FP) for (0,1) and False Negatives (FN) for (1,0).

From our confusion matrix, we can deduce that:

- The classifier has correctly identified 30 defective parts (i.e. truly defective and classified as defective). These are the true positives.

- However, it has failed to identify 10 defective parts; instead, they have been falsely classified as good parts. These are the false negatives.

- Looking at the cell corresponding to the labels (0,0), we observe that the classifier has correctly classified 40 parts as belonging to class 0 (good parts). These are our true negatives.

- Finally, the figure 20 (cell (0,1)) tells us that 20 good parts have been falsely identified as defective. These are our false positives.
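Reading the four named counts off the matrix is just a matter of indexing by (true label, predicted label). A small sketch, assuming the same layout as in this post (class 1 in the first row and first column); the helper name `unpack_counts` is hypothetical.

```python
def unpack_counts(cm):
    """Name the cells of a 2x2 matrix laid out as [[TP, FN], [FP, TN]]."""
    (tp, fn), (fp, tn) = cm  # row 0: true label 1; row 1: true label 0
    return {"TP": tp, "FN": fn, "FP": fp, "TN": tn}

# The example matrix from the parts bin.
counts = unpack_counts([[30, 10], [20, 40]])
print(counts)  # {'TP': 30, 'FN': 10, 'FP': 20, 'TN': 40}
```

If your matrix uses the other common ordering (class 0 first), the same cells sit at mirrored positions, so it is worth checking the layout before unpacking.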

Insights from the confusion matrix

Now that we have drawn our confusion matrix, let us see how we can put the four values to good use. They can be used to calculate the following metrics:

- Accuracy: the ratio of correctly classified instances to the total number of instances.

  Formula: $\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$

- Precision: the ratio of true positive instances to all the instances classified as positive by the classifier.

  Formula: $\text{Precision} = \frac{TP}{TP + FP}$

- Recall (a.k.a. sensitivity): the identified (true) positives as a ratio of the total positives in the dataset. Recall is also known as the True Positive Rate, or TPR.

  Formula: $\text{Recall} = \frac{TP}{TP + FN}$

- FPR (False Positive Rate): the ratio of false positives to the total number of negatives.

  Formula: $\text{FPR} = \frac{FP}{FP + TN} = 1 - \text{Specificity}$

- Specificity: the capability of the classifier to pick out the negatives from the data. It is the equivalent of recall for the negative class.

  Formula: $\text{Specificity} = \frac{TN}{TN + FP}$
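Plugging the parts-bin counts (TP = 30, FN = 10, FP = 20, TN = 40) into these formulas gives the values below. A minimal sketch in plain Python; the function name `classification_metrics` is hypothetical, and it does not guard against zero denominators (for instance, precision is undefined when nothing is predicted positive).

```python
def classification_metrics(tp, fn, fp, tn):
    """Compute accuracy, precision, recall, FPR and specificity from raw counts."""
    return {
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
        "precision": tp / (tp + fp),
        "recall": tp / (tp + fn),        # a.k.a. sensitivity or TPR
        "fpr": fp / (fp + tn),           # equals 1 - specificity
        "specificity": tn / (tn + fp),   # recall for the negative class
    }

# Counts from the parts-bin example.
m = classification_metrics(tp=30, fn=10, fp=20, tn=40)
for name, value in m.items():
    print(f"{name}: {value:.3f}")
# accuracy: 0.700
# precision: 0.600
# recall: 0.750
# fpr: 0.333
# specificity: 0.667
```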

Additional learnings:

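Two additional insights can easily be derived from the confusion matrix:

- Is there a class imbalance? Comparing $TP + FN$ (the actual positives) with $FP + TN$ (the actual negatives) tells us whether there is a class imbalance.

- Odds ratio: the odds ratio tells us how much better our classifier is than a dummy classifier making random guesses. A value close to 1 might lead you to question the need for the classifier at all. Here it is computed as:

  $\frac{\text{Recall}}{1 - \text{Specificity}} = \frac{TPR}{FPR}$

  Strictly speaking, this ratio is known as the positive likelihood ratio; the diagnostic odds ratio is $\frac{TP \times TN}{FP \times FN}$. Both equal 1 for a classifier that guesses at random, and larger values indicate a more useful classifier.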
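Both checks can be sketched on the parts-bin counts (TP = 30, FN = 10, FP = 20, TN = 40). The function names below are hypothetical. The quantity Recall/(1 − Specificity), i.e. TPR/FPR, is usually called the positive likelihood ratio; for comparison, the sketch also computes the diagnostic odds ratio, (TP × TN)/(FP × FN). Neither function guards against zero denominators.

```python
def class_balance(tp, fn, fp, tn):
    """Return (actual positives, actual negatives)."""
    return tp + fn, fp + tn

def likelihood_ratio_positive(tp, fn, fp, tn):
    """Recall / (1 - Specificity), i.e. TPR / FPR; about 1 for random guessing."""
    tpr = tp / (tp + fn)
    fpr = fp / (fp + tn)
    return tpr / fpr

def diagnostic_odds_ratio(tp, fn, fp, tn):
    """(TP * TN) / (FP * FN); also about 1 for random guessing."""
    return (tp * tn) / (fp * fn)

counts = dict(tp=30, fn=10, fp=20, tn=40)
print(class_balance(**counts))                        # (40, 60)
print(round(likelihood_ratio_positive(**counts), 2))  # 2.25
print(diagnostic_odds_ratio(**counts))                # 6.0
```

With 40 positives against 60 negatives, the example is only mildly imbalanced, and both ratios sit well above 1, so the classifier does better than random guessing.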

Interpreting the metrics

Ordinarily, a classifier's performance can be summarized by its accuracy. For highly imbalanced datasets, however, accuracy is not a good measure of the classification: the majority class dominates the count of correct predictions and yields a high but misleading accuracy. In such cases, precision and recall are more representative of the classifier's performance.

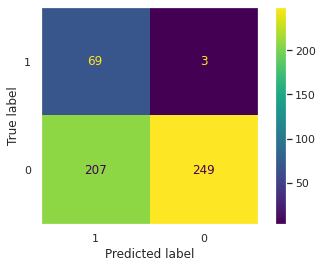
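To see why accuracy misleads on imbalanced data, consider a hypothetical bin of 1,000 parts of which only 10 are defective, and a trivial "classifier" that labels every part as good. These numbers are invented for illustration; the sketch simply counts the four cells and applies the accuracy and recall formulas.

```python
# Hypothetical imbalanced bin: 10 defective (1) and 990 good (0) parts.
y_true = [1] * 10 + [0] * 990
# A trivial "classifier" that predicts good (0) for every part.
y_pred = [0] * len(y_true)

pairs = list(zip(y_true, y_pred))
tp = sum(1 for t, p in pairs if t == 1 and p == 1)
fn = sum(1 for t, p in pairs if t == 1 and p == 0)
fp = sum(1 for t, p in pairs if t == 0 and p == 1)
tn = sum(1 for t, p in pairs if t == 0 and p == 0)

accuracy = (tp + tn) / (tp + tn + fp + fn)
recall = tp / (tp + fn)
# Precision is undefined here (tp + fp == 0): nothing was predicted defective.
print(f"accuracy = {accuracy:.2%}")  # accuracy = 99.00%
print(f"recall   = {recall:.2%}")    # recall   = 0.00%
```

The 99% accuracy comes entirely from the majority class, while recall exposes that not a single defective part was caught.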

Confusion matrix in the wild

- If you look at a search engine as a classifier, it has relatively low precision and an unknown recall (why?). What saves it is the fact that it ranks results for us.

- In rare disease identification, recall (sensitivity) is the metric of primary importance.

- In fraud detection, banks might insist on high precision, for fear that false positives would trouble honest customers.

- The legal system in a country might operate under the principle of ‘no innocent shall be punished’. This translates to high precision, but poor recall (indicated by the seemingly guilty getting away).

- A police blockade set up to identify fugitives will aim for near 100% recall and will not be bothered about precision; in fact, the police might insist on checking each and every vehicle.

To see a confusion matrix in context, refer to this Kaggle notebook.

Finally, some intuition to remember the confusion matrix: the task of a classifier is to get as many positive instances as it can onto the true positive side, while keeping as many negative instances as possible out of the false positive side.